Observing traffic prioritization in Comcast's network

14 May 2012

Even if you don’t make it all the way through this long post, take a look at the graphs below. I think they demonstrate reasonable evidence of the prioritization of traffic in violation of the terms of the Comcast/NBCU Consent Decree.

—

Thank you to everyone who commented on my previous post here and elsewhere. Much of the feedback I received was along a few related lines:

- The DiffServ field (which contains a packet’s DSCP bits) is defined in RFC 2474. This RFC does not prescribe any specific treatment or priority to be associated with each DSCP value, so the specific values themselves do not necessarily indicate prioritization.

- Many transit providers’ routers ignore DSCP values when making routing and queuing decisions.

- DOCSIS QoS is much more complex and dynamic than the standard IP QoS features available on most routers. Under ideal conditions, DOCSIS 3.0 offers the ability to segregate specific traffic flows onto dedicated RF spectrum, so it’s possible Comcast isn’t sharing any RF spectrum between their supposed Xbox VoD service and regular Internet traffic.

All of these points are true. Comcast is the United States’ largest MSO. They operate a national IP network over which nearly all of the services they provide are delivered. Comcast openly admits to prioritizing certain kinds of traffic—like their digital voice product. It’s clear that some configuration is in place to do so. And their practice of rewriting DSCP values at the edge of their network is a strong indicator that those values have meaning to Comcast’s routers.

I also omitted any discussion of DOCSIS QoS in my previous post. My theory was that certain DSCP values signal the CMTS to map packets into different DOCSIS QoS classes. In addition, one reader of my previous post, HN commenter tonyb, suggested that Comcast might be using PacketCable Multimedia to dynamically prioritize streams and rightly pointed out that DOCSIS 3.0 offered the ability to segregate certain traffic flows onto dedicated RF spectrum. I decided it was time to dig into the CableLabs specifications to get answers and devise a set of experiments to determine whether my hypotheses were correct.

What I’ve concluded is that Comcast is using separate DOCSIS service flows to prioritize the traffic to the Xfinity Xbox app (for consistent terminology, I’ll call this traffic “Xfinity traffic” in the rest of the post). This separation allows them to exempt that traffic from both bandwidth cap accounting and download speed limits. It’s still plain-old HTTP delivering MP4-encoded video files, just like the other streaming services use, but additional priority is granted to the Xfinity traffic at the DOCSIS level. I still believe that the DSCP values I observed in the packet headers of Xfinity traffic are the method by which Comcast signals that traffic is to be prioritized, both in their backbone and regional networks and in their DOCSIS network. In addition, contrary to what has been widely speculated, the Xfinity traffic is not delivered via separate, dedicated downstream channel(s); it uses the same downstream channels as regular Internet traffic.

As for my argument about Comcast’s cost basis for streams delivered by third-party CDNs versus Comcast’s “internal” CDN, this comment thread from HN commenter zbisch pointed out that I didn’t quite include enough context in my last post. To summarize: it’s rumored that all of the major CDNs (Akamai, Limelight and post-interconnection-dispute Level 3) actually pay Comcast to carry their traffic to end users. The capital and operational expenses of operating the streaming servers are borne by the third-party CDNs. The last-mile capacity required is bit-for-bit equal between third-party CDN and internal CDN. To deliver a stream from Seattle, Comcast has to haul the bits further and through more expensive router ports than a stream delivered from a third-party CDN. Hence my conclusion that, from a financial perspective, it is not cheaper for Comcast to deliver streams from their internal CDN.

Background—DOCSIS downstream QoS

Since DOCSIS 1.1, the cable standard has supported “service flows” per cable modem. A service flow is a class of traffic with an assigned priority. Before DOCSIS 3.0, a cable modem could attach to only one 6 MHz downstream channel at a time, so all service flows shared the same RF channel, and the CMTS would prefer higher-priority service flows when scheduling downstream bandwidth. DOCSIS 3.0’s high speeds were enabled by allowing modems to bond several downstream channels together at one time in Downstream Bonding Groups. In addition, the DOCSIS 3.0 specification allows cable operators to map certain service flows to each downstream bonding group. So in the case where both the CMTS and cable modem support DOCSIS 3.0, it is possible to dedicate specific, full 6 MHz downstream channels to certain classes of traffic. As I will demonstrate below, Comcast has not configured the service flows which carry Xfinity traffic to use dedicated RF spectrum.

Background—Packet Cable Multimedia (PCMM)

So how does the Comcast network know how to prioritize these streams?

In a 2008 filing with the FCC in which Comcast detailed the implementation of its network management practices, it disclosed the deployment of PacketCable Multimedia (PCMM) throughout its High-Speed Internet (HSI) network. PCMM is a technology implemented in both the CMTS and a policy server which allows for very fine-grained dynamic adjustment of the priority of traffic. The FCC filing disclosed the use of aggregated IPDR information as a signal to deprioritize ALL traffic to a congestion-causing end user. But instead of signaling traffic as high priority by using DSCP bits in IP headers, cable operator-blessed applications can request prioritization of specific traffic by communicating with the PCMM policy server. The PCMM server does this by pushing a “gate” to the customer’s CMTS. Each gate is a configuration record which maps a specific set of classifiers (which can be things like source IP address, source port, etc.) to a DOCSIS service flow.
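To make the idea of a gate concrete, here is a minimal sketch of a record that maps classifiers to a service flow. It is a toy model only: the class names, field names, service flow labels, and addresses are all hypothetical, and it does not reflect the actual PCMM message format or any CMTS implementation.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Packet:
    """The two header fields this toy model classifies on."""
    src_ip: str
    src_port: int


@dataclass(frozen=True)
class Gate:
    """Hypothetical gate: classifier fields plus the service flow they map to."""
    service_flow: str
    src_ip: Optional[str] = None    # None means "match any source address"
    src_port: Optional[int] = None  # None means "match any source port"

    def matches(self, pkt: Packet) -> bool:
        # A classifier field matches when it is unset or equal to the packet's value.
        return (self.src_ip in (None, pkt.src_ip)
                and self.src_port in (None, pkt.src_port))


def select_service_flow(pkt: Packet, gates: Sequence[Gate],
                        default: str = "best-effort") -> str:
    """Return the service flow of the first matching gate, else the default."""
    for gate in gates:
        if gate.matches(pkt):
            return gate.service_flow
    return default
```

With a single gate for source `192.0.2.10` port `80` (a documentation address, not a real server), packets from that source would land in the gate's service flow and everything else would fall through to the default.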

While my observations lead me to believe that Comcast is mapping traffic into separate service flows solely based upon DSCP values, it is possible that they have integrated the PCMM infrastructure they built for network management into this streaming service. It’s also possible that they have deployed a DPI solution for observing and prioritizing their own video traffic, but that seems as if it would be inefficient and unreliable.

Observations

1. Xfinity traffic uses the same RF spectrum as public Internet traffic, thus it is not “separated” for the purposes of prioritization

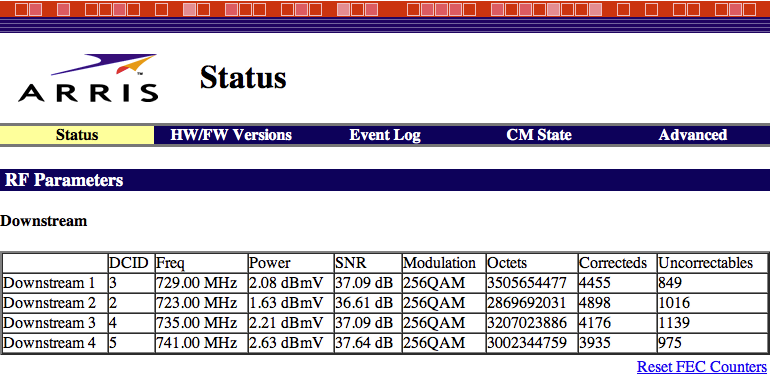

The DOCSIS spec mandates that a simple customer-facing read-only management interface be made available at a well-known IP (which happens to be 192.168.100.1). The cable modem at my house, an Arris TM702G, exposes information via a tiny web server on this IP. (Try it, actually: If you’re on a cable connection right now, go to http://192.168.100.1/ and see your modem’s status.) One of the pieces of diagnostic information that my cable modem exposes is a count of the number of bytes received per downstream channel. Here’s a screenshot of what it looks like:

If Comcast were using dedicated downstream channels to deliver Xfinity traffic, only the “Octets” field(s) associated with those dedicated channels would increase when streaming video in the Xfinity Xbox app. Instead, like any other Internet traffic, the Xfinity traffic can be directly observed to be balanced (approximately) evenly across all 4 of the downstream channels my modem has attached to.

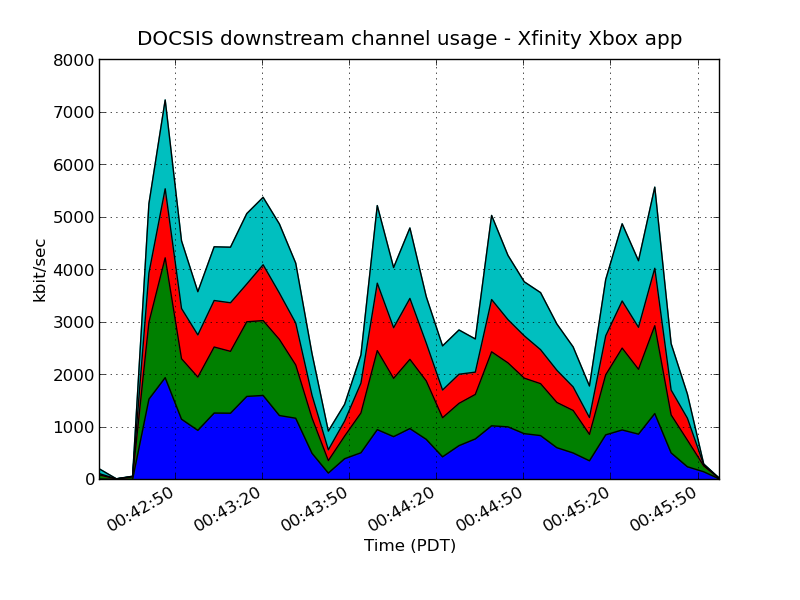

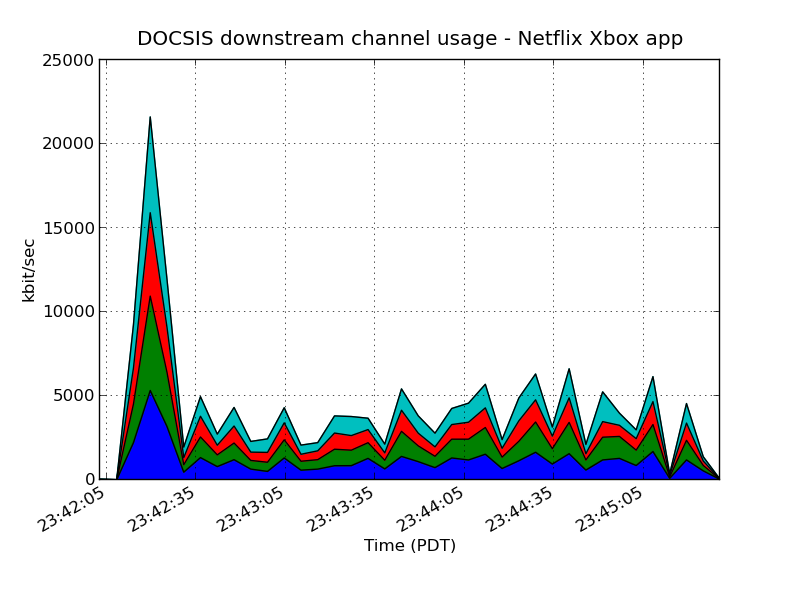

I wrote a quick Python script to poll my cable modem’s per-channel counters every five seconds and calculate the effective bandwidth of each channel. While running this script, I opened the Netflix app on my Xbox and started a stream. Then I closed the app, waited a little for usage to drop, and started a stream in the Xfinity app. Here’s a graph of both the total bandwidth usage and individual downstream channel usage for an Xfinity stream (top) and a Netflix stream (bottom). As you can see, usage was roughly equivalent and spread across each channel.
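The per-channel bandwidth calculation comes down to differencing two successive counter readings. The following is a minimal sketch of that arithmetic, not the script used for these measurements. The function name is hypothetical, and it assumes the octet counters are 32 bits wide and wrap around, which may not hold for every modem.

```python
from typing import List, Sequence

# Assumption: the modem reports 32-bit octet counters that wrap to zero.
COUNTER_MODULUS = 2 ** 32


def channel_rates_kbps(previous: Sequence[int], current: Sequence[int],
                       interval_s: float) -> List[float]:
    """Convert two per-channel octet counter readings into kbit/s per channel."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    if len(previous) != len(current):
        raise ValueError("readings must cover the same channels")
    rates = []
    for before, after in zip(previous, current):
        # The modulo keeps the delta correct across a single counter wrap.
        delta_octets = (after - before) % COUNTER_MODULUS
        rates.append(delta_octets * 8 / interval_s / 1000)
    return rates
```

For example, a channel whose counter advances by 625,000 octets over a five-second interval works out to 1,000 kbit/s. Summing the returned list gives the total downstream rate across all bonded channels.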

In the interest of being fully transparent, here’s the script I used to log the counter values. If you have a similar modem, you should be able to run this script and duplicate my results.

2. Xfinity traffic is prioritized over other Internet traffic and is exempt from rate limiting

I wanted to be able to observe Comcast’s traffic prioritization in action, but in the absence of actual traffic congestion, prioritization is impossible to observe, so I created a way to simulate heavy usage of my broadband connection. I wrote a short Python script which was able to saturate my Internet service’s bandwidth allocation by generating traffic, specifically by starting 24 simultaneous, repeated downloads of an archive containing a copy of the Google Chrome web browser, direct from Google’s CDN. It’s important to note that because of TCP’s congestion avoidance algorithms, the simulated congestion did not affect anyone else’s broadband service, nor did it cause any kind of backbone congestion in Comcast’s network. It merely caused the traffic destined to my cable modem to be policed like any other download stream.
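A load generator of this kind can be sketched with a thread pool that keeps re-downloading the same file. This is an illustration under stated assumptions, not the script used here: the function names are hypothetical, the URL is whatever large file you choose to pass in, and the `fetch` parameter exists only so the download step can be swapped out.

```python
import threading
import urllib.request
from concurrent.futures import ThreadPoolExecutor


def fetch_and_discard(url: str, chunk_size: int = 64 * 1024) -> int:
    """Download `url`, throw the body away, and return the bytes received."""
    received = 0
    with urllib.request.urlopen(url) as response:
        while True:
            chunk = response.read(chunk_size)
            if not chunk:
                return received
            received += len(chunk)


def _download_loop(url, stop, fetch):
    """Repeat the download until asked to stop; return total bytes received."""
    received = 0
    while not stop.is_set():
        try:
            received += fetch(url)
        except OSError:
            stop.wait(1.0)  # back off briefly after a network error
    return received


def saturate(url: str, workers: int = 24, duration_s: float = 60.0,
             fetch=fetch_and_discard) -> int:
    """Run `workers` concurrent repeated downloads for about `duration_s` seconds."""
    stop = threading.Event()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(_download_loop, url, stop, fetch)
                   for _ in range(workers)]
        stop.wait(duration_s)  # sleeps for the duration; nothing sets the event early
        stop.set()             # each worker finishes its current download, then exits
        return sum(future.result() for future in futures)
```

Calling `saturate(some_url)` with the defaults would mirror the 24 concurrent downloads described above. Each worker completes its in-flight download before stopping, so the run can last somewhat longer than `duration_s`.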

Why run 24 simultaneous downloads, then? In order to keep a single flow from unfairly using all of a downstream link’s bandwidth, a CMTS often will employ a fair-queuing algorithm to determine which packets to drop when a customer is exceeding their rate limit. This ensures that multiple flows of equal priority share the available downstream bandwidth equally. Because I have such a high service tier (download speed), simulating fewer downloads would not sufficiently degrade the available bandwidth for the purposes of observation. If I had a much lower service tier, fewer simultaneous downloads would be required.
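As a rough worked example of why the number of downloads matters, consider an idealized fair queue in which every flow of equal priority gets an equal share of the rate limit. The helper below is a hypothetical illustration that treats the video stream as a single flow; real TCP flows only approximate these shares.

```python
def fair_share_mbps(rate_limit_mbps: float, competing_flows: int) -> float:
    """Idealized share for one flow that joins `competing_flows` equal-priority flows."""
    if competing_flows < 0:
        raise ValueError("competing_flows must not be negative")
    # The new flow plus the existing ones split the rate limit evenly.
    return rate_limit_mbps / (competing_flows + 1)
```

On a 25 Mbps tier, 24 competing downloads would leave a single video flow about 1 Mbps under this model, while only 4 competing downloads would still leave it about 5 Mbps.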

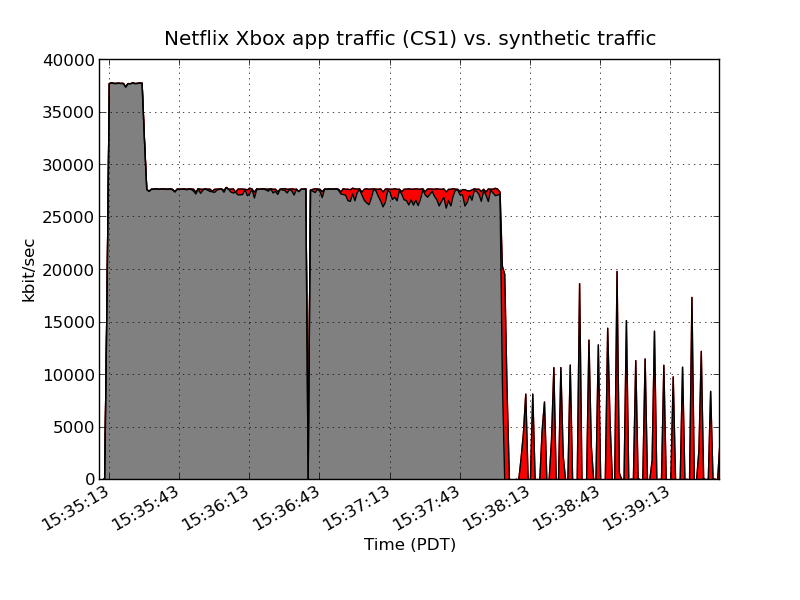

With the congestion script written, I wrote a script to poll the interface counters of a managed switch via SNMP every second. I hooked the Xbox and the computer generating the download requests for the synthetic traffic up to separate Ethernet ports on this switch. From this script’s output, I was able to calculate both my total bandwidth usage and the amount of bandwidth used by the synthetic traffic (dark gray) and the video stream traffic (red) separately. I then tried to watch Netflix and Xfinity streams while this synthetic traffic was being generated.

If I start a Netflix stream while the synthetic congestion is present, the video takes a long time to buffer and the quality is poor because of the contention for downstream bandwidth caused by the synthetic traffic. The plateau is me hitting my download speed rate limit. (My service tier is rated at 25 Mbps. These measurements are in kbit/sec of actual Ethernet frames, not TCP payload. I believe that accounts for the difference.)

If I terminate the script generating the synthetic traffic (around 15:38:00), you’ll see that the bandwidth available to the Netflix app increases. When the Netflix app is not experiencing this congestion the video quality shows a corresponding improvement.

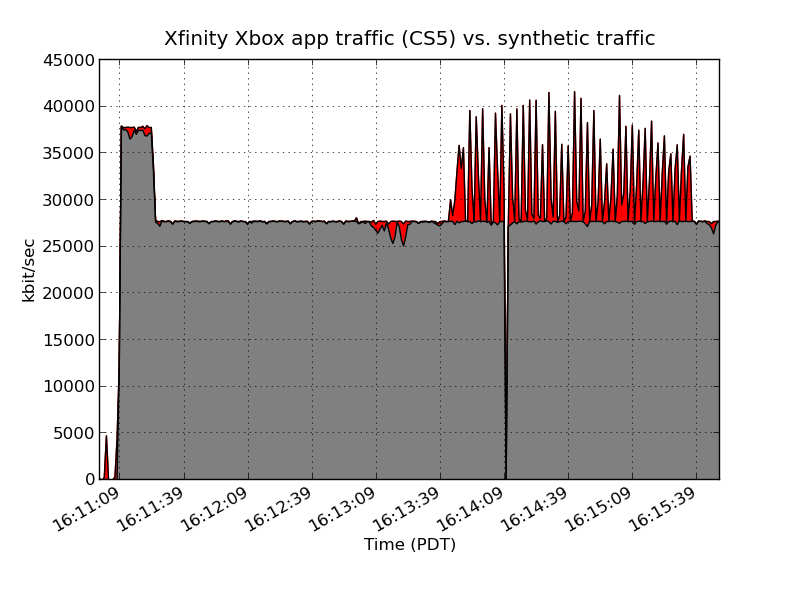

However, if I run the Xfinity app, the video quality is seemingly unaffected by the synthetic traffic. In addition, you’ll see that unlike the Netflix example, the amount of bandwidth available to Internet traffic is unaffected. Because the Xfinity traffic is mapped to a different DOCSIS service flow when prioritized, it is exempted from the downstream rate limit, so my total bandwidth usage stays consistently higher than my service tier would normally allow.

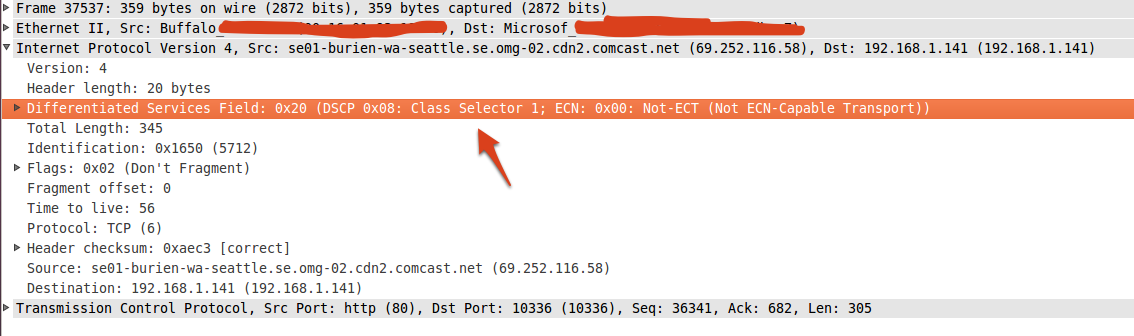

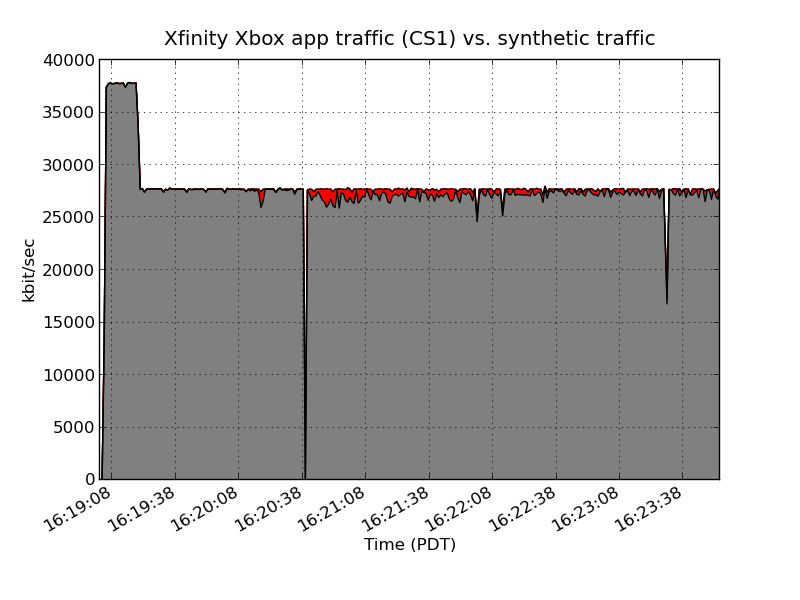

There’s one exception to the prioritization that I was able to find. It appears that Comcast distributes content to various CDN nodes inconsistently: after my original post went up, I began observing my content come from different nodes. I was finally seeing Comcast deliver content to me from local SF Bay Area CDN servers. All of this traffic was marked with the same CS5 DSCP value seen on the traffic from Seattle in my previous post, except for traffic from a single cache server in Burien, WA, a city outside Seattle. This one cache server was sending me traffic marked as CS1 (the same priority level as Netflix traffic), and that traffic *was* affected by the congestion caused by the synthetic traffic. This reinforces my conclusion that Comcast is using DSCP values to signal the prioritization of Xfinity traffic at the DOCSIS MAC layer. Here’s a screenshot of one of the packets received, marked as CS1:

And here’s a similar graph as above. Since it’s not marked as CS5, it doesn’t get assigned to the same DOCSIS service flow and isn’t prioritized. Notice it looks more like the Netflix graph than the Xfinity one:

I’m willing to bet streams that come off this cache server count against your bandwidth cap, too, as the service flow ID will be wrong in the IPDR record that gets generated.
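To check markings like the CS5 and CS1 values discussed above in your own captures, the DSCP can be read directly from the IPv4 header: it is the upper six bits of the second byte (the DS field from RFC 2474), and the class selector codepoints CS0 through CS7 are the values whose low three bits are zero. The sketch below uses hypothetical function names and handles IPv4 headers only.

```python
# Class selector codepoints: CSn has the value n shifted left by three bits.
CLASS_SELECTORS = {n << 3: f"CS{n}" for n in range(8)}


def dscp_from_ipv4_header(header: bytes) -> int:
    """Return the 6-bit DSCP from the first bytes of a raw IPv4 header."""
    if len(header) < 20:
        raise ValueError("an IPv4 header is at least 20 bytes long")
    if header[0] >> 4 != 4:
        raise ValueError("not an IPv4 header")
    # Byte 1 is the DS field; its low two bits are ECN, not part of the DSCP.
    return header[1] >> 2


def dscp_name(dscp: int) -> str:
    """Name class selector codepoints; fall back to the numeric value otherwise."""
    return CLASS_SELECTORS.get(dscp, f"DSCP {dscp}")
```

A DS field byte of `0xA0` decodes to DSCP 40, which is CS5, and `0x20` decodes to DSCP 8, which is CS1.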

Here’s the ugly script I wrote to log the counters to disk.

Conclusion

Hopefully these observations have served to provide some context for my previous post. If you have any constructive comments on the methodology used, I’d appreciate hearing them.

Thanks to my wonderful wife Lauren for putting up with me making a mess in the living room over the last week.